Virtualization and Storage Networking Best Practices from the Experts

Nov 26, 2018

Ever make a mistake configuring a storage array or wonder if you’re maximizing the value of your virtualized environment? With all the different storage arrays and connectivity protocols available today, knowing best practices can help improve operational efficiency and ensure resilient operations. That’s why the SNIA Networking Storage Forum is kicking off 2019 with a live webcast “Virtualization and Storage Networking Best Practices.”

In this webcast, Jason Massae from VMware and Cody Hosterman from Pure Storage will share insights and lessons learned, as reported by VMware’s storage global services, by discussing:

- Common mistakes when setting up storage arrays

- Why iSCSI is the number one storage configuration problem

- Configuring adapters for iSCSI or iSER

- How to verify your path selection policy (PSP) matches your array requirements

- NFS best practices

- How to maximize the value of your array and virtualization

- Troubleshooting recommendations

How Scale-Out Storage Changes Networking Demands

Oct 23, 2018

- Scale-out storage solutions and what workloads they can address

- How your network may need to evolve to support scale-out storage

- Network considerations to ensure performance for demanding workloads

- Key considerations for all-flash scale-out storage solutions

Introducing the Networking Storage Forum

Oct 9, 2018

At SNIA, we are dedicated to staying on top of storage trends and technologies to fulfill our mission as a globally recognized and trusted authority for storage leadership, standards, and technology expertise. For the last several years, the Ethernet Storage Forum has been working hard to provide high-quality educational and informational material related to all kinds of storage.

From our "Everything You Wanted To Know About Storage But Were Too Proud To Ask" series, to the absolutely phenomenal (and required viewing) "Storage Performance Benchmarking" series, to the "Great Storage Debates" series, we've produced dozens of hours of material.

Technologies have evolved, and we've come to a point where there's a need to understand how these systems and architectures work, beyond just the type of wire that is used. Today, new systems are bringing storage to completely new audiences. From scale-up to scale-out, from disaggregated to hyperconverged, to RDMA and NVMe-oF, there is more to storage networking than just your favorite transport. For example, when we talk about NVMe™ over Fabrics, the protocol is broader than just one way of accomplishing what you need. When we talk about virtualized environments, we need to examine the nature of the relationship between hypervisors and all kinds of networks. When we look at "Storage as a Service," we need to understand how we can create workable systems from all the tools at our disposal.

Bigger Than Our Britches

As I said, SNIA's Ethernet Storage Forum has been working to bring these new technologies to the forefront, so that you can see (and understand) the bigger picture. To that end, we realized that we needed to rethink the way our charter worked, to be even more inclusive of technologies relevant to storage and networking.

So...

Introducing the Networking Storage Forum. In this group we're going to continue producing top-quality, vendor-neutral material related to storage networking solutions. We'll be talking about:

- Storage Protocols (iSCSI, FC, FCoE, NFS, SMB, NVMe-oF, etc.)

- Architectures (Hyperconvergence, Virtualization, Storage as a Service, etc.)

- Storage Best Practices

- New and developing technologies

- Visit: snia.org/nsf

- Follow: @SNIANSF

- Subscribe: sniansfblog.org

- Watch: SNIAVideo Network Storage Playlist

Storage Expert Takes on Hyperconverged Questions

Apr 17, 2017

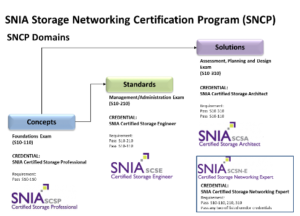

SNIA Ranked #2 for Storage Certifications - and Now You Can Take Exams at 900 Locations Worldwide

Mar 29, 2017

Should you become certified? With heterogeneous data centers as the de facto environment today, IT certification can be of great value, especially in a career area where you are trying to advance, and even more so if it is a vendor-neutral certification that complements specific product skills. And with surveys suggesting that IT storage professionals may anticipate six-figure salaries, going for certification seems like a good idea. Don't just take our word for it: CIO Magazine has cited the SNIA Certified Storage Networking Expert (SCSN-E) as #2 of its top seven storage certifications, and as the way to join an elite group of storage professionals at the top of their game.

SNIA now makes it even easier to take its three exams: Foundations; Management/Administration; and Assessment, Planning, and Design. Exams are now available to on-site test takers globally via a new relationship with Kryterion Testing Network, which uses over 900 testing centers in 120 countries to securely proctor exams worldwide for SNIA Certification Exam candidates. To learn more about Kryterion or to locate your nearest testing center, go to www.kryteriononline.com/Locate-Test-Center. For more information about SNIA’s Storage Networking Certification Program (SNCP), visit https://www.snia.org/education/certification.

Latency Budgets for Solid State Storage Access

Mar 7, 2017

New solid state storage technologies are forcing the industry to refine distinctions between networks and other types of system interconnects. The question on everyone’s mind is: when is it beneficial to use networks to access solid state storage, particularly persistent memory?

It’s not quite as simple as a “yes/no” answer. The answer to this question involves application, interconnect, memory technology and scalability factors that can be analyzed in the context of a latency budget.

On April 19th, Doug Voigt, Chair of the SNIA NVM Programming Model Technical Work Group, returns for a live SNIA Ethernet Storage Forum webcast, “Architectural Principles for Networked Solid State Storage Access – Part 2,” where we will explore latency budgets for various types of solid state storage access. These budgets can be used to determine which combinations of interconnects, technologies, and scales are compatible with Load/Store instruction access and which are better suited to IO completion techniques such as polling or blocking.
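To make the idea of a latency budget a little more concrete, here is a minimal Python sketch. It adds up the latency contributed by each component on one access path and maps the total onto a completion style. The component names, the nanosecond figures, and the two thresholds are hypothetical placeholders chosen for illustration; they are not numbers from the webcast, and real limits depend on the application, CPU, and memory technology.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Component:
    """One contributor to the end-to-end latency of a storage access."""
    name: str
    latency_ns: float


def total_latency(path: List[Component]) -> float:
    """Sum the latency contributed by every component on the access path."""
    return sum(c.latency_ns for c in path)


def suggest_completion(total_ns: float,
                       load_store_max_ns: float,
                       polling_max_ns: float) -> str:
    """Map a total latency onto a completion technique.

    Both thresholds are hypothetical tuning parameters supplied by the caller.
    """
    if total_ns <= load_store_max_ns:
        return "load/store"   # short enough to stall the CPU on an instruction
    if total_ns <= polling_max_ns:
        return "polling"      # worth spinning until the IO completes
    return "blocking"         # long enough that yielding the CPU pays off


# Hypothetical access path: every figure below is an illustrative assumption.
path = [
    Component("media", 300.0),
    Component("local interconnect", 200.0),
    Component("network hop", 1500.0),
]

total = total_latency(path)
print(total, suggest_completion(total, load_store_max_ns=1000.0, polling_max_ns=10000.0))
```

The value of the exercise is the comparison: adding a network hop to the path can push the total past what is reasonable for synchronous load/store access, which is the kind of trade-off a latency budget is meant to expose.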

In this webcast you’ll learn:

- Why latency is important in accessing solid state storage

- How to determine the appropriate use of networking in the context of a latency budget

- Do’s and don’ts for Load/Store access

This is a technical seminar built upon part 1 of this series. If you missed it, you can view it on demand at your convenience. It will give you a solid foundation on this topic, outlining key architectural principles that allow us to think about the application of networked solid state technologies more systematically.

I hope you will register today for the April 19th event. Doug and I will be on hand to answer questions on the spot.

Clearing Up Confusion on Common Storage Networking Terms

Jan 12, 2017

Do you ever feel a bit confused about common storage networking terms? You’re not alone. At our recent SNIA Ethernet Storage Forum webcast “Everything You Wanted To Know About Storage But Were Too Proud To Ask – Part Mauve,” we had experts from Cisco, Mellanox and NetApp explain the differences between:

- Channel vs. Busses

- Control Plane vs. Data Plane

- Fabric vs. Network

If you missed the live webcast, you can watch it on-demand. As promised, we’re also providing answers to the questions we got during the webcast. Between these questions and the presentation itself, we hope it will help you decode these common, but sometimes confusing terms.

And remember, “Everything You Wanted To Know About Storage But Were Too Proud To Ask” is a webcast series with a “colorfully-named pod” for each topic we tackle. You can register now for our next webcast: Part Teal, The Buffering Pod, on Feb. 14th.

Q. Why do we have both “Fibre” and “Fiber”?

A. Fiber optics is the term for the optical technology used by Fibre Channel fabrics. While a common story is that the “Fibre” spelling came about to accommodate the French (FC is, after all, an international standard), in actuality it was a marketing idea to create a more distinctive name, and it was decided to use the British spelling, “Fibre”.

Q. Will OpenStack change all the rules of the game?

A. Yes. OpenStack is all about centralizing the control plane of many different aspects of infrastructure.

Q. The difference between control and data plane matters only when we discuss software defined storage and software defined networking, not in traditional switching and storage.

A. It matters regardless. You need to understand how much each individual control plane can handle and how many control planes you have from an overall management perspective. In cases where you have too many control planes, SDN and SDS can be a benefit to you.
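The split between the two planes can be sketched in a few lines of Python. This is a toy model, not any vendor's or SDN framework's API: the class name, method names, switch names, and port numbers are all hypothetical. The control plane decides where traffic should go and programs a forwarding table; the data plane only performs fast lookups against what was programmed. The `central_controller` function illustrates the SDN idea of one control plane programming many switches instead of each switch running its own.

```python
from typing import Dict, Optional


class ForwardingTable:
    """Per-switch state: written by the control plane, read by the data plane."""

    def __init__(self) -> None:
        self._routes: Dict[str, int] = {}

    # Control plane: decides where traffic should go and programs the table.
    def install(self, destination: str, port: int) -> None:
        self._routes[destination] = port

    def withdraw(self, destination: str) -> None:
        self._routes.pop(destination, None)

    # Data plane: the per-frame fast path only looks up what was programmed.
    def forward(self, destination: str) -> Optional[int]:
        return self._routes.get(destination)


def central_controller(tables: Dict[str, ForwardingTable],
                       destination: str,
                       ports: Dict[str, int]) -> None:
    """One SDN-style control plane programming many switches' data planes."""
    for switch, table in tables.items():
        table.install(destination, ports[switch])


# Hypothetical two-switch fabric.
switches = {"sw1": ForwardingTable(), "sw2": ForwardingTable()}
central_controller(switches, "array-a", {"sw1": 3, "sw2": 7})
print(switches["sw1"].forward("array-a"), switches["sw2"].forward("array-a"))  # 3 7
switches["sw1"].withdraw("array-a")
print(switches["sw1"].forward("array-a"))  # None
```

Counting how many places call `install` is a rough stand-in for the management question in the answer above: with one controller there is a single control plane to reason about, rather than one per switch.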

Q. I’ve heard that networks use stateless protocols. Does FC do the same?

A. Fibre Channel has several different classes of service, which can be either stateful or stateless. Most applications of Fibre Channel use Class 3, as it is the preferred class for SCSI traffic. A connection between Fibre Channel endpoints is always stateful (as it involves a login process to the Fibre Channel fabric). The transport protocol is augmented by Fibre Channel exchanges, which are managed on a per-hop basis. Retransmissions are handled by devices when exchanges are incomplete or lost, meaning that each exchange is a stateful transmission, but the protocol itself is considered stateless in modern SCSI-transport Fibre Channel.

iSCSI, as a connection-oriented protocol, creates a nexus between an initiator and a target, and is considered stateful. In addition, SMB, NFSv4, FTP, and TCP are stateful protocols, while NFSv2, NFSv3, HTTP, and IP are stateless protocols.
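The practical difference between stateful and stateless designs can be sketched as follows. This is a generic Python illustration, not a model of iSCSI, NFS, or any other real protocol; the class names, the `login`/`read` methods, and the string responses are hypothetical. The stateful server remembers each client after a login step, so a request only makes sense while that session exists. The stateless server keeps no per-client memory, so every request must carry everything needed to serve it.

```python
from typing import Dict


class StatefulServer:
    """Remembers each client after a login step (session-based design)."""

    def __init__(self) -> None:
        self._sessions: Dict[int, str] = {}
        self._next_id = 1

    def login(self, client: str) -> int:
        session_id = self._next_id
        self._next_id += 1
        self._sessions[session_id] = client
        return session_id

    def read(self, session_id: int, block: int) -> str:
        # Raises KeyError if the server has no record of this session.
        client = self._sessions[session_id]
        return f"{client}:block{block}"


class StatelessServer:
    """Keeps no per-client memory: every request is self-describing."""

    def read(self, client: str, block: int) -> str:
        return f"{client}:block{block}"


stateful = StatefulServer()
sid = stateful.login("host1")
print(stateful.read(sid, 42))  # host1:block42

restarted = StatefulServer()  # a restart discards all session state
try:
    restarted.read(sid, 42)
except KeyError:
    print("session lost; client must log in again")

stateless = StatelessServer()
print(stateless.read("host1", 42))  # host1:block42
```

The restart case shows the trade-off: a stateful design lets requests stay small and lets the server track context, but that context must be re-established if it is lost, while a stateless design survives a restart at the cost of repeating context in every request.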

Q. Where do CIFS/SMB come into the picture?

A. CIFS/SMB is part of a network stack. Network stacks and their layers deserve a separate talk; in this presentation, we were talking primarily about the physical layer of networks and fabrics. To oversimplify, a network stack consists of multiple layers of protocols that run on top of the physical layer. In the case of FC, those protocols include control plane protocols (such as FC-SW) and data plane protocols. In FC, the most common data plane protocol is FCP (used by SCSI, FICON, and FC-NVMe). In the case of Ethernet, those protocols also include the control plane (such as TCP/IP) and data plane protocols. In Ethernet, there are many commonly used data plane protocols for storage (such as iSCSI, NFS, and CIFS/SMB).
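The idea of protocols layered on top of one another can be shown as simple encapsulation, where each layer wraps the one above it. This Python sketch is a toy: the bracketed strings stand in for real headers, and the `encapsulate` helper and the "SCSI READ" payload are hypothetical. Real stacks add addressing, checksums, segmentation, and more. It only shows the nesting order, with iSCSI carried over TCP, IP, and Ethernet, and SCSI carried by FCP inside Fibre Channel frames.

```python
from typing import List


def encapsulate(payload: str, layers: List[str]) -> str:
    """Wrap a payload in each protocol layer, innermost layer first."""
    frame = payload
    for layer in layers:
        frame = f"{layer}[{frame}]"
    return frame


# Ethernet storage: iSCSI runs over TCP, which runs over IP, over an Ethernet link.
ethernet_stack = ["iSCSI", "TCP", "IP", "Ethernet"]
print(encapsulate("SCSI READ", ethernet_stack))
# Ethernet[IP[TCP[iSCSI[SCSI READ]]]]

# Fibre Channel: SCSI commands are carried by FCP inside FC frames.
fc_stack = ["FCP", "FC"]
print(encapsulate("SCSI READ", fc_stack))
# FC[FCP[SCSI READ]]
```

Reading the output from the outside in mirrors how a frame is received: the physical-layer technology is peeled off first, and the storage protocol sits closest to the payload.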
